Social Network Analysis

This assignment utilized networkx to analyze relationships between nodes in a network.

First I imported the required libraries.

```python
import networkx as nx
import pandas as pd
import numpy as np
import pickle
```

Part 1 - Random Graph Identification

For the first part of this assignment you will analyze randomly generated graphs and determine which algorithm created them.

P1_Graphs is a list containing 5 networkx graphs. Each of these graphs was generated by one of three possible algorithms:

- Preferential Attachment (‘PA’)
- Small World with low probability of rewiring (‘SW_L’)
- Small World with high probability of rewiring (‘SW_H’)

Analyze each of the 5 graphs and determine which of the three algorithms generated the graph.

The graph_identification function should return a list of length 5 where each element in the list is either ‘PA’, ‘SW_L’, or ‘SW_H’.
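One way to see why clustering and the spread of degrees separate the three generators is to build reference graphs with networkx's own generators and compare those statistics. The sketch below is illustrative only. The sizes (n=1000), the attachment parameter m=2, the neighbour count k=10 and the rewiring probabilities 0.01 and 0.9 are assumptions, not the unknown parameters behind P1_Graphs.

```python
import networkx as nx

def summarize(graph):
    """Return (average clustering, number of distinct degree values)."""
    degrees = [d for _, d in graph.degree()]
    return nx.average_clustering(graph), len(set(degrees))

# Hypothetical parameters; the generators behind P1_Graphs are not known.
reference = {
    "PA": nx.barabasi_albert_graph(1000, 2, seed=42),
    "SW_L": nx.watts_strogatz_graph(1000, 10, 0.01, seed=42),
    "SW_H": nx.watts_strogatz_graph(1000, 10, 0.9, seed=42),
}

stats = {name: summarize(g) for name, g in reference.items()}
for name, (clustering, n_degrees) in stats.items():
    print(f"{name}: clustering={clustering:.3f}, distinct degrees={n_degrees}")
```

Preferential attachment produces a heavy-tailed degree distribution, so it has many distinct degree values. A lightly rewired small-world graph keeps the high clustering of its ring lattice, and heavy rewiring destroys that clustering. The exact thresholds in the solution (10 distinct degrees, clustering 0.1) were presumably tuned to P1_Graphs and may not transfer to other parameter choices.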

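```python
# Load the five pickled networkx graphs.
with open('A4_graphs', 'rb') as f:
    P1_Graphs = pickle.load(f)

def graph_identification():

    def degree_dist(graph):
        # Fraction of nodes at each distinct degree value.
        # dict() turns the DegreeView of networkx 2.x+ into {node: degree}.
        degrees = dict(graph.degree())
        degree_val = sorted(set(degrees.values()))
        histogram = [list(degrees.values()).count(i) / float(nx.number_of_nodes(graph))
                     for i in degree_val]
        return histogram

    algorithm = []

    for graph in P1_Graphs:
        clustering = nx.average_clustering(graph)
        # Computed for inspection only; the decision rule below does not use it.
        shortest_path = nx.average_shortest_path_length(graph)
        degree_hist = degree_dist(graph)

        # Many distinct degree values suggest preferential attachment;
        # otherwise low clustering points to heavy rewiring.
        if len(degree_hist) > 10:
            algorithm.append('PA')
        elif clustering < .1:
            algorithm.append('SW_H')
        else:
            algorithm.append('SW_L')

    return algorithm
```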

```
['PA', 'SW_L', 'SW_L', 'PA', 'SW_H']
```
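This is a quick check of what the degree_dist helper in the solution computes. It returns the fraction of nodes at each degree value that actually occurs, in increasing order of degree. networkx's built-in nx.degree_histogram instead returns raw counts for every degree from 0 up to the maximum, zeros included. The sketch below compares the two on a four-node path graph. Its two endpoints have degree 1 and its two interior nodes have degree 2. The helper is re-declared here with dict(graph.degree()), because networkx 2.x and later return a DegreeView rather than a dict.

```python
import networkx as nx

def degree_dist(graph):
    """Fraction of nodes at each degree value that occurs in the graph."""
    degrees = dict(graph.degree())  # DegreeView -> {node: degree}
    values = list(degrees.values())
    n = graph.number_of_nodes()
    return [values.count(d) / n for d in sorted(set(values))]

path = nx.path_graph(4)  # 0-1-2-3

print(degree_dist(path))          # [0.5, 0.5]
print(nx.degree_histogram(path))  # [0, 2, 2]
```

The rule in graph_identification only uses len(degree_hist), which is simply the number of distinct degree values in the graph.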

Part 2 - Company Emails

For the second part of this assignment, you will be working with a company’s email network where each node corresponds to a person at the company, and each edge indicates that at least one email has been sent between two people.

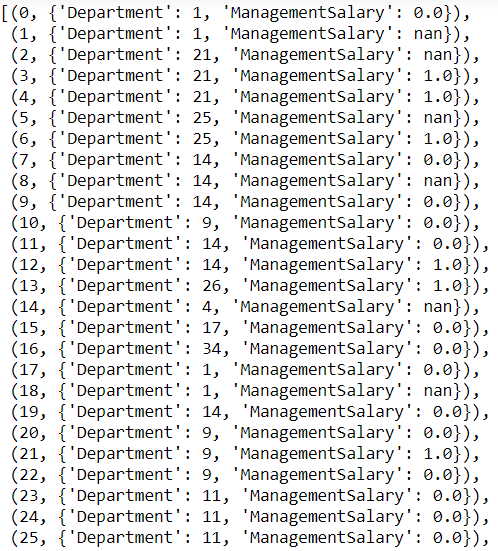

The network also contains the node attributes Department and ManagementSalary.

Department indicates the department in the company which the person belongs to, and ManagementSalary indicates whether that person is receiving a management position salary.

Let’s get a peek at the data.

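```python
# nx.read_gpickle was removed in networkx 3.0; a graph written with
# nx.write_gpickle can be read back with plain pickle.load.
with open('email_prediction.txt', 'rb') as f:
    G = pickle.load(f)

G.nodes(data=True)
```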
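The node attributes can be pulled into a pandas DataFrame in one step, which also makes the missing ManagementSalary values easy to count. Below is a sketch on a hypothetical three-node graph. The node ids and attribute values are made up for illustration, and only the attribute names match G.

```python
import networkx as nx
import pandas as pd

# Hypothetical toy graph using the same attribute names as G.
toy = nx.Graph()
toy.add_node(0, Department=1, ManagementSalary=0.0)
toy.add_node(1, Department=1, ManagementSalary=float("nan"))
toy.add_node(2, Department=4, ManagementSalary=1.0)
toy.add_edges_from([(0, 1), (1, 2)])

# {node: attribute dict} -> one row per node, one column per attribute.
nodes = pd.DataFrame.from_dict(dict(toy.nodes(data=True)), orient="index")
print(nodes)
print("missing labels:", nodes["ManagementSalary"].isnull().sum())  # 1
```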

Part 2A - Salary Prediction

Using network G, identify the people in the network with missing values for the node attribute ManagementSalary and predict whether or not these individuals are receiving a management position salary.

To accomplish this, you will need to create a matrix of node features using networkx, train a sklearn classifier on nodes that have ManagementSalary data, and predict a probability of the node receiving a management salary for nodes where ManagementSalary is missing.
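Here is a self-contained sketch of that workflow: network features per node, a classifier trained on labeled nodes, and probabilities for unlabeled ones. It uses Zachary's karate club graph as a stand-in for G. Its 'club' node attribute ('Mr. Hi' or 'Officer') plays the role of ManagementSalary. Hiding every fourth node's label is an arbitrary choice that simulates missing values.

```python
import networkx as nx
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier

# Stand-in for G; 'Officer' membership stands in for ManagementSalary == 1.
K = nx.karate_club_graph()
df = pd.DataFrame(index=list(K.nodes()))
df["label"] = [1.0 if K.nodes[n]["club"] == "Officer" else 0.0 for n in df.index]
df.loc[df.index % 4 == 0, "label"] = np.nan  # pretend these labels are missing

# One row per node, one column per network feature.
df["Degree"] = pd.Series(dict(K.degree()))
df["Clustering"] = pd.Series(nx.clustering(K))
df["Closeness"] = pd.Series(nx.closeness_centrality(K))
df["Betweenness"] = pd.Series(nx.betweenness_centrality(K))

features = ["Degree", "Clustering", "Closeness", "Betweenness"]
train = df[df["label"].notnull()]
test = df[df["label"].isnull()]

clf = GradientBoostingClassifier(random_state=0)
clf.fit(train[features], train["label"])

# Column 1 of predict_proba is the probability of label 1.0 (classes_ is sorted).
proba = pd.Series(clf.predict_proba(test[features])[:, 1], index=test.index)
print(proba.round(3))
```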

Your predictions will need to be given as the probability that the corresponding employee is receiving a management position salary.

The evaluation metric for this assignment is the Area Under the ROC Curve (AUC).

Your grade will be based on the AUC score computed for your classifier. A model with an AUC of 0.88 or higher will receive full points, and one with an AUC of 0.82 or higher will pass (get 80% of the full points).
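AUC depends only on how the predicted probabilities rank the examples. It equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counting one half. Below is a minimal pure-Python sketch of that pairwise definition on a small made-up example. For these inputs, sklearn.metrics.roc_auc_score gives the same value.

```python
from itertools import product

def pairwise_auc(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(pairwise_auc(y_true, scores))  # 0.75
```

Because only the ordering matters, applying any strictly increasing function to all scores leaves the AUC unchanged. The predicted probabilities therefore do not need to be well calibrated to score well on this metric.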

Using your trained classifier, return a series of length 252 with the data being the probability of receiving management salary, and the index being the node id.

Example:

```
1       1.0
2       0.0
5       0.8
8       1.0
…
996     0.7
1000    0.5
1001    0.0
Length: 252, dtype: float64
```

```python
from sklearn.ensemble import GradientBoostingClassifier

def salary_predictions():

    # Loading node attributes into a dataframe: one row per node.
    df = pd.DataFrame.from_dict(dict(G.nodes(data=True)), orient='index')

    # Getting network metrics to use in the classifier.
    df['Clustering'] = pd.Series(nx.clustering(G))
    df['Degree'] = pd.Series(dict(G.degree()))
    df['Degree Centrality'] = pd.Series(nx.degree_centrality(G))
    df['Closeness'] = pd.Series(nx.closeness_centrality(G))  # normalized by default
    df['Betweenness'] = pd.Series(nx.betweenness_centrality(G, normalized=True))
    df['Page Rank'] = pd.Series(nx.pagerank(G))

    # Labeled nodes form the training set; nodes missing ManagementSalary are predicted.
    train = df[df['ManagementSalary'].notnull()]
    test = df[df['ManagementSalary'].isnull()]

    # Every column except the target is a feature (Department included).
    X_train = train.drop(columns='ManagementSalary')
    X_test = test.drop(columns='ManagementSalary')
    y_train = train['ManagementSalary']

    # Fitting the GBC
    clf = GradientBoostingClassifier(random_state=0)
    clf.fit(X_train, y_train)

    # Probability of receiving a management salary for each test node.
    test_prob = pd.Series(clf.predict_proba(X_test)[:, 1], index=X_test.index)
    return test_prob
```

```
1       0.005034
2       0.987573
5       0.987976
8       0.117562
14      0.053312
18      0.021946
27      0.028183
30      0.902317
31      0.137383
34      0.018777
37      0.020159
40      0.016472
45      0.005846
54      0.174362
55      0.538832
60      0.080264
62      0.988456
65      0.987422
77      0.008042
79      0.017314
97      0.009253
101     0.003136
103     0.562415
108     0.010781
113     0.063559
122     0.001791
141     0.166784
142     0.988456
144     0.012987
145     0.698570
…
913     0.009200
914     0.010464
915     0.001552
918     0.021805
923     0.016919
926     0.028838
931     0.007891
934     0.001371
939     0.001133
944     0.001504
945     0.016919
947     0.028178
950     0.018011
951     0.004870
953     0.004007
959     0.001049
962     0.001615
963     0.048345
968     0.025535
969     0.033527
974     0.017409
984     0.001910
987     0.026094
989     0.028178
991     0.026421
992     0.001003
994     0.001504
996     0.001219
1000    0.004840
1001    0.019469
Length: 252, dtype: float64
```

- AUC score was 0.9461740694617408.
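The AUC above was computed on the hidden labels of the 252 test nodes. Before submitting, one way to estimate it is to cross-validate on the labeled nodes with scoring='roc_auc'. The sketch below uses sklearn's cross_val_score. X and y are synthetic stand-ins from make_classification (a hypothetical substitute for the labeled rows' features and ManagementSalary values), and this step is not part of the original solution.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Synthetic stand-in for the labeled node features and their 0/1 labels.
X, y = make_classification(n_samples=300, n_features=7, weights=[0.8, 0.2],
                           random_state=0)

clf = GradientBoostingClassifier(random_state=0)
# Stratified folds keep the class imbalance similar in every split.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(clf, X, y, cv=cv, scoring="roc_auc")

print(scores.round(3), "mean AUC:", round(scores.mean(), 3))
```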

Part 2B - New Connections Prediction

For the last part of this assignment, you will predict future connections between employees of the network. The future connections information has been loaded into the variable future_connections. The index is a tuple indicating a pair of nodes that currently do not have a connection, and the Future Connection column indicates if an edge between those two nodes will exist in the future, where a value of 1.0 indicates a future connection.

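```python
import ast

# ast.literal_eval turns the "(u, v)" strings in the first column into tuples
# without executing arbitrary code, unlike eval.
future_connections = pd.read_csv('Future_Connections.csv', index_col=0,
                                  converters={0: ast.literal_eval})
```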
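The converters argument turns the CSV's first column, stored as text such as "(107, 348)", back into Python tuples. Below is a self-contained sketch on an in-memory CSV whose rows are made up; only the 'Future Connection' column name comes from the assignment. It uses ast.literal_eval, which accepts only Python literals and is therefore a safer parser for file contents than eval.

```python
import ast
import io

import pandas as pd

# Made-up rows shaped like Future_Connections.csv:
# an unnamed tuple index column and a 'Future Connection' column.
csv_text = """,Future Connection
"(6, 840)",0.0
"(4, 197)",0.0
"(107, 348)",
"""

df = pd.read_csv(io.StringIO(csv_text), index_col=0,
                 converters={0: ast.literal_eval})
print(df)
print(type(df.index[0]))  # <class 'tuple'>
```

The empty value in the last row is read as NaN, which is how unlabeled edges are distinguished from labeled ones.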

Using network G and future_connections, identify the edges in future_connections with missing values and predict whether or not these edges will have a future connection.

To accomplish this, you will need to create a matrix of features for the edges found in future_connections using networkx, train a sklearn classifier on those edges in future_connections that have Future Connection data, and predict a probability of the edge being a future connection for those edges in future_connections where Future Connection is missing.
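The solution below scores each non-adjacent pair (u, v) with five features built from the neighbourhoods N(u) and N(v). Below is a small worked check on a hypothetical 4-cycle 0-1-3-2-0. There the pair (0, 3) shares both neighbours, 1 and 2, and each of them has degree 2.

```python
import networkx as nx

# Hypothetical 4-cycle: 0-1, 1-3, 3-2, 2-0. The pair (0, 3) is not an edge.
C = nx.Graph([(0, 1), (1, 3), (3, 2), (2, 0)])
pair = [(0, 3)]

cn = len(list(nx.common_neighbors(C, 0, 3)))         # |N(u) & N(v)|
jc = next(nx.jaccard_coefficient(C, pair))[2]        # |N(u) & N(v)| / |N(u) | N(v)|
ra = next(nx.resource_allocation_index(C, pair))[2]  # sum over shared w of 1/deg(w)
aa = next(nx.adamic_adar_index(C, pair))[2]          # sum over shared w of 1/log(deg(w))
pa = next(nx.preferential_attachment(C, pair))[2]    # deg(u) * deg(v)

print(cn, jc, ra, round(aa, 3), pa)  # 2 1.0 1.0 2.885 4
```

Each networkx scoring function yields (u, v, score) triples in the order of the ebunch it is given. That is why the solution can take item[2] from each triple and assign the list directly as a column aligned with future_connections.index.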

Your predictions will need to be given as the probability of the corresponding edge being a future connection.

The evaluation metric for this assignment is the Area Under the ROC Curve (AUC).

Your grade will be based on the AUC score computed for your classifier. A model with an AUC of 0.88 or higher will receive full points, and one with an AUC of 0.82 or higher will pass (get 80% of the full points).

Using your trained classifier, return a series of length 122112 with the data being the probability of the edge being a future connection, and the index being the edge as represented by a tuple of nodes.

Example:

```
(107, 348)    0.35
(542, 751)    0.40
(20, 426)     0.55
(50, 989)     0.35
…
(939, 940)    0.15
(555, 905)    0.35
(75, 101)     0.65
Length: 122112, dtype: float64
```

```python
from sklearn.ensemble import GradientBoostingClassifier

def new_connections_predictions():

    # Work on a copy so the global future_connections is left unchanged.
    df = future_connections.copy()
    edges = list(df.index)

    # Link-prediction metrics for each candidate edge, used as classifier features.
    df['Common Neighbors'] = [len(list(nx.common_neighbors(G, u, v))) for u, v in edges]
    df['Jaccard Coefficient'] = [p for _, _, p in nx.jaccard_coefficient(G, ebunch=edges)]
    df['Resource Allocation Index'] = [p for _, _, p in nx.resource_allocation_index(G, ebunch=edges)]
    df['Adamic Adar Index'] = [p for _, _, p in nx.adamic_adar_index(G, ebunch=edges)]
    df['Preferential Attachment'] = [p for _, _, p in nx.preferential_attachment(G, ebunch=edges)]

    # Setting up training and test sets.
    train = df[df['Future Connection'].notnull()]
    test = df[df['Future Connection'].isnull()]

    X_train = train.drop(columns='Future Connection')
    X_test = test.drop(columns='Future Connection')
    y_train = train['Future Connection']

    # Fitting the GBC
    clf = GradientBoostingClassifier(random_state=0)
    clf.fit(X_train, y_train)

    # Probability that each test edge becomes a future connection.
    test_prob = pd.Series(clf.predict_proba(X_test)[:, 1], index=X_test.index)
    return test_prob
```

```
(107, 348)    0.030342
(542, 751)    0.013050
(20, 426)     0.539323
(50, 989)     0.013050
(942, 986)    0.013050
(324, 857)    0.013050
(13, 710)     0.118869
(19, 271)     0.149760
(319, 878)    0.013050
(659, 707)    0.013050
(49, 843)     0.013050
(208, 893)    0.013050
(377, 469)    0.013792
(405, 999)    0.019812
(129, 740)    0.021756
(292, 618)    0.039721
(239, 689)    0.013050
(359, 373)    0.013792
(53, 523)     0.029697
(276, 984)    0.013050
(202, 997)    0.013050
(604, 619)    0.081836
(270, 911)    0.013050
(261, 481)    0.062892
(200, 450)    0.906969
(213, 634)    0.013050
(644, 735)    0.142963
(346, 553)    0.013050
(521, 738)    0.013050
(422, 953)    0.018552
…
(672, 848)    0.013050
(28, 127)     0.963145
(202, 661)    0.013050
(54, 195)     0.997535
(295, 864)    0.013050
(814, 936)    0.013050
(839, 874)    0.013050
(139, 843)    0.013050
(461, 544)    0.013467
(68, 487)     0.013467
(622, 932)    0.013050
(504, 936)    0.016148
(479, 528)    0.013050
(186, 670)    0.013050
(90, 395)     0.088466
(329, 521)    0.024649
(127, 218)    0.198212
(463, 993)    0.013050
(123, 142)    0.805473
(764, 885)    0.013050
(144, 824)    0.013050
(742, 985)    0.013050
(506, 684)    0.013050
(505, 916)    0.013050
(149, 214)    0.962314
(165, 923)    0.013467
(673, 755)    0.013050
(939, 940)    0.013050
(555, 905)    0.013050
(75, 101)     0.016076
Length: 122112, dtype: float64
```

- AUC score was 0.9102170953769284.